Reverse proxies have been around for a very long time and, depending on your application, are either an interesting addition or a key element of your architecture.

Despite their long history, I was recently reminded of some interesting applications of caching reverse proxies that are worth revisiting.

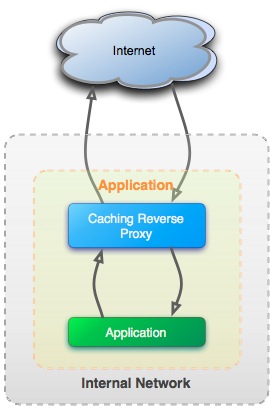

First, let's look at a fairly typical configuration where a caching reverse proxy is fronting some sort of web application. The web application exposes its functionality on one port (say 8080), and the proxy accepts inbound HTTP requests on another (say port 80) and forwards them to the application's port. The application responds with the data matching the request and, if the response is cacheable, the proxy stores a copy of that content in its cache. There are lots of other paths, typically around failures and different responses, but essentially the proxy acts as a pipe between the Internet and the application.
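The request path described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any particular proxy product works: the `CachingProxy` class, the `(status, headers, body)` response tuple, and the injected `origin` callable are all hypothetical names, and cacheability is reduced to a `Cache-Control: max-age` check.

```python
import time
from typing import Callable, Dict, Optional, Tuple

# Hypothetical response shape used only for this sketch: (status, headers, body).
Response = Tuple[int, Dict[str, str], bytes]


def max_age(headers: Dict[str, str]) -> Optional[int]:
    """Return max-age in seconds from Cache-Control, or None if not cacheable."""
    directives = [d.strip().lower() for d in headers.get("Cache-Control", "").split(",")]
    if "no-store" in directives or "private" in directives:
        return None
    for directive in directives:
        if directive.startswith("max-age="):
            try:
                return int(directive[len("max-age="):])
            except ValueError:
                return None
    return None


class CachingProxy:
    """Sits between clients and the application, caching responses by URL."""

    def __init__(self, origin: Callable[[str], Response],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.origin = origin  # stands in for the application on port 8080
        self.clock = clock
        self.cache: Dict[str, Tuple[float, Response]] = {}

    def get(self, url: str) -> Response:
        entry = self.cache.get(url)
        if entry is not None and self.clock() < entry[0]:
            return entry[1]  # fresh copy: the application is not contacted
        response = self.origin(url)  # cache miss or expired: forward the request
        status, headers, _body = response
        ttl = max_age(headers)
        if status == 200 and ttl:
            # Store a copy only when the application marked the response cacheable.
            self.cache[url] = (self.clock() + ttl, response)
        return response
```

Two requests for the same URL inside the `max-age` window reach the application only once; everything else passes straight through.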

Basic Caching Reverse Proxy configuration

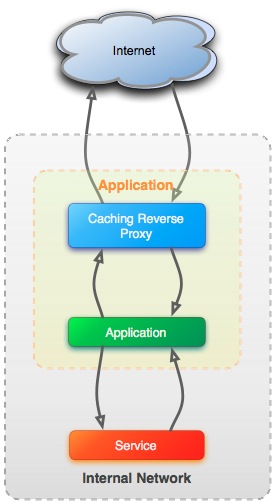

Let's assume that the application makes use of some internal services on the corporate network. Typically these might be HTTP-based web services. This sort of arrangement is fairly common, and quite often the design stops here.

Basic Caching Reverse Proxy with internal service

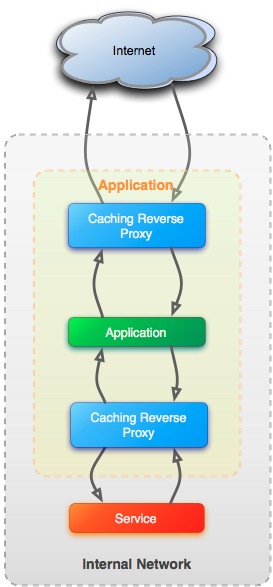

Since we are using HTTP to communicate between the web application and some internal web service, we can add a reverse proxy between the application and the service. In doing so we have added a layer of insulation. Let's assume that the proxy is installed and configured on the web application side. It has been configured to honor cache headers and to serve stale content should something go wrong.

This insulating capability can then be leveraged to provide a degraded service during updates and system failures.

Caching Reverse Proxy used to insulate from service failures.

Should the service be unavailable for any reason, content that is already in the cache can still be served. Updates and other non-cached operations would fail as usual, but for a large group of web applications this may be acceptable: telling the user about the problem and that it is being worked on may be all that is required.

Just like insulation, the proxy does not block all errors propagating from the service, but in the right circumstances it can lessen the blow of something going wrong.
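A minimal sketch of this degrade-on-failure behavior, assuming a fixed time-to-live rather than real cache headers, might look like the following. The `StaleOnErrorCache` class, the `ServiceUnavailable` exception, and the `service(method, url, payload)` callable are hypothetical names chosen for illustration; a real deployment would rely on the proxy's own stale-serving configuration.

```python
import time
from typing import Callable, Dict, Tuple


class ServiceUnavailable(Exception):
    """Hypothetical error raised when the internal service cannot be reached."""


class StaleOnErrorCache:
    """Caches reads by URL and falls back to expired copies when the service fails."""

    def __init__(self, service: Callable[..., bytes], ttl: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.service = service  # assumed signature: service(method, url, payload=None)
        self.ttl = ttl          # assumed fixed freshness lifetime, in seconds
        self.clock = clock
        self.entries: Dict[str, Tuple[float, bytes]] = {}

    def read(self, url: str) -> Tuple[bytes, bool]:
        """Return (body, is_stale) for a cacheable read."""
        entry = self.entries.get(url)
        if entry is not None and self.clock() < entry[0]:
            return entry[1], False  # fresh hit, no service call
        try:
            body = self.service("GET", url)
        except ServiceUnavailable:
            if entry is not None:
                return entry[1], True  # degrade: serve the expired copy
            raise  # nothing cached, so the failure reaches the caller
        self.entries[url] = (self.clock() + self.ttl, body)
        return body, False

    def write(self, url: str, payload: bytes) -> bytes:
        # Updates are never cached, so a service failure propagates as usual.
        return self.service("POST", url, payload)
```

The `is_stale` flag gives the application a hook for telling the user that the data may be out of date while the problem is being worked on.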

Attributes of this design

These are just some of the attributes that I think apply to this design.

- Multiple requests resulting in the same cacheable service request (by URL) result in a single service call.

- Simpler application design. The application need not worry about caching service call results.

- Resilient to intermittent back-end service failure

- Improved performance

- Reduced service call bandwidth requirements

- Lower load on the service.

- More complex configuration and deployment process

- Slightly increased latency for in-bound and out-bound requests.